Introduction to eIQ

In previous blogs, we discussed Edge AI in depth: its features, advantages, and future scope. In this blog, we study how Edge AI is implemented in practice.

AI has played a critical role in driving innovation across many industries. Advances in vision and voice technologies continue to foster the development of large intelligent models, resulting in new use cases and improved user experiences. As these use cases move into everyday products, there is growing demand for AI that runs directly on edge devices built around microcontrollers (MCUs) and microprocessors (MPUs).

On NXP EdgeVerse MCUs and MPUs, a range of machine learning algorithms can be used with NXP’s eIQ artificial intelligence (AI) software development environment. It includes neural network compilers, inference engines, optimised libraries, and an ML workflow toolkit called eIQ Toolkit.

eIQ enables developers to efficiently build, optimise, and deploy AI and ML algorithms on NXP’s MCUs and MPUs, specifically targeting embedded edge devices in industries such as automotive, industrial, healthcare, and consumer electronics.

What is NXP's eIQ Toolkit?

The NXP® eIQ® artificial intelligence (AI) software development environment enables the use of ML algorithms on NXP microcontrollers and microprocessors, including i.MX RT crossover MCUs, i.MX family application processors, and S32K3 MCUs.

eIQ AI software includes an ML workflow tool called eIQ Toolkit, along with inference engines, neural network compilers, and optimised libraries.

Now let’s break down each of the key terms above to understand exactly what eIQ is.

ML Workflow tool

- First, the ML workflow: the systematic process of developing, training, evaluating, and deploying machine learning models. It encompasses a series of steps that guide practitioners through the entire lifecycle of a machine learning project, from problem definition to solution deployment. An ML workflow tool helps carry out these steps.

- Reference: "What Is a Machine Learning Workflow?" (Pure Storage)

- eIQ Toolkit is therefore an ML workflow tool for NXP MCUs and MPUs.

Inference Engines

An inference engine is a software runtime environment that executes a trained and compiled machine learning model to produce predictions (also called inferences) based on new input data.

An inference engine is the runtime core of an AI-enabled system: it executes trained models in real-world environments, processing input data and returning decisions, often under real-time constraints.

Inference is the final stage of the ML lifecycle, where models are put to real use in applications, devices, or systems.

In other words, an inference engine is a specialised software stack designed to execute trained ML models efficiently on the target hardware.
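To make the idea concrete, here is a minimal sketch of what an inference engine does at its core: it takes fixed, already-trained parameters and applies them to new input to produce a prediction. The weights, the two-feature input, and the function names are hypothetical; real engines such as TensorFlow Lite load the model from a file and run optimised kernels, which this pure-Python toy does not attempt.

```python
import math

# Hypothetical "trained" parameters for a tiny 2-input, 3-class classifier.
# A real engine would load these from a model file; here they are hard-coded.
WEIGHTS = [
    [1.0, -1.0],   # class 0
    [-1.0, 1.0],   # class 1
    [0.5, 0.5],    # class 2
]
BIASES = [0.0, 0.0, -0.25]


def softmax(logits):
    """Convert raw scores into probabilities (numerically stable form)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def run_inference(features):
    """Execute the fixed model on one input: dense layer -> softmax -> top class."""
    logits = [
        sum(w * x for w, x in zip(row, features)) + b
        for row, b in zip(WEIGHTS, BIASES)
    ]
    probs = softmax(logits)
    predicted = max(range(len(probs)), key=probs.__getitem__)
    return predicted, probs


label, probs = run_inference([2.0, 0.5])
print(label, [round(p, 3) for p in probs])
```

Note that nothing is learned at this point: the parameters never change, and the engine's only job is to evaluate them quickly on each new input.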

Why eIQ?

Modern smart products need to process information quickly and securely, often without an internet connection. Edge AI delivers this by running ML models on the device itself, ensuring:

- Lower latency: faster responses, because data does not make a round trip to the cloud.

- Greater privacy: data can stay on the device instead of being sent to a server.

- Reliability: Edge AI devices keep working even without cloud access.

Key Features and Components

- Inference Engines: eIQ supports mainstream engines such as TensorFlow Lite, Arm NN, and OpenCV, enabling deployment and acceleration of various deep learning models on supported hardware.

- Neural Network Compilers: eIQ converts and optimises neural network models for target NXP edge devices.

- Model Zoo: a collection of pre-trained models ready for deployment or fine-tuning. Developers can fine-tune these models on a custom dataset to suit the desired application, then integrate them into the hardware.

- Optimised Libraries: eIQ provides hardware-specific libraries for the Arm Cortex-A and Cortex-M architectures, maximising inference speed and efficiency.
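As one example of the kind of optimisation a neural network compiler or converter can perform, the sketch below folds a batch-normalization layer into the preceding dense layer, so the device runs one layer instead of two and gets the same result. This is a general, widely used technique; the parameter values are hypothetical, and the sketch does not claim to reproduce the exact passes eIQ's compilers run.

```python
import math

EPS = 1e-3  # small constant added to the variance, as batch normalization does


def dense(weights, bias, x):
    """y = W x + b for a list-of-rows weight matrix."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def batch_norm(y, gamma, beta, mean, var):
    """Inference-time batch normalization, applied per output channel."""
    return [g * (yi - m) / math.sqrt(v + EPS) + bt
            for yi, g, bt, m, v in zip(y, gamma, beta, mean, var)]


def fold_batch_norm(weights, bias, gamma, beta, mean, var):
    """Merge a batch-norm layer into the preceding dense layer's weights and bias."""
    scales = [g / math.sqrt(v + EPS) for g, v in zip(gamma, var)]
    folded_w = [[w * s for w in row] for row, s in zip(weights, scales)]
    folded_b = [(b - m) * s + bt for b, m, s, bt in zip(bias, mean, scales, beta)]
    return folded_w, folded_b


# Hypothetical layer parameters, chosen only for illustration.
W = [[0.2, -0.4], [0.7, 0.1]]
b = [0.05, -0.3]
gamma, beta = [1.5, 0.8], [0.1, -0.2]
mean, var = [0.02, -0.1], [0.5, 2.0]

x = [1.0, 3.0]
two_ops = batch_norm(dense(W, b, x), gamma, beta, mean, var)
Wf, bf = fold_batch_norm(W, b, gamma, beta, mean, var)
one_op = dense(Wf, bf, x)
print(two_ops, one_op)  # same values up to floating-point rounding, one layer fewer
```

The folding works because batch normalization at inference time is just a per-channel scale and shift, which can be absorbed into the weights and bias computed ahead of time.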

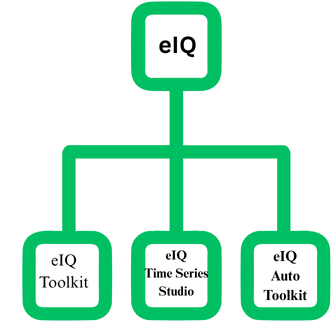

eIQ tools and Components

- eIQ Toolkit: workflow and visualization tools for end-to-end ML model development and deployment. It includes the eIQ Portal GUI and command-line tools that make development and deployment easier to carry out and understand.

- eIQ Time Series Studio: an automated ML workflow tool for building and deploying time-series-based ML models on MCU-class devices. Time series data is a sequence of data points recorded at consistent, evenly spaced intervals over time.

- eIQ Auto Toolkit: a deep learning toolkit for S32 automotive processors that provides automotive-grade inference engines and supports compliance with automotive safety standards.
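Time series models usually do not consume a raw stream directly. A common preprocessing pattern is to cut the evenly spaced samples into fixed-length windows and compute features per window. The sketch below shows that pattern in plain Python; the window size, step, feature set, and sample values are assumptions for illustration, not what eIQ Time Series Studio does internally.

```python
import statistics


def sliding_windows(samples, window_size, step):
    """Split an evenly sampled signal into fixed-length, possibly overlapping windows."""
    if window_size <= 0 or step <= 0:
        raise ValueError("window_size and step must be positive")
    return [
        samples[start:start + window_size]
        for start in range(0, len(samples) - window_size + 1, step)
    ]


def window_features(window):
    """Compute simple per-window features a small model could consume."""
    return {
        "mean": statistics.fmean(window),
        "std": statistics.pstdev(window),
        "peak_to_peak": max(window) - min(window),
    }


# Hypothetical accelerometer readings sampled at a fixed rate.
signal = [0.0, 0.1, 0.0, -0.1, 0.0, 2.5, -2.4, 2.6, -2.5, 0.1]
windows = sliding_windows(signal, window_size=4, step=2)
for w in windows:
    print(window_features(w))
```

Because the samples are evenly spaced, each window covers the same span of time, which is what lets a single fixed-size model compare one window with another.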

The eIQ workflow

The following steps outline the workflow from idea to deployment.

- Data Collection: Gather and prepare your data, such as images, sounds, or sensor readings.

- Model Selection: Choose from pre-trained models or import your own from frameworks like TensorFlow or PyTorch.

- Optimization: Use eIQ’s tools to convert and fine-tune models so they run efficiently on your target hardware.

- Testing: Simulate and evaluate your models locally, tweaking parameters for the best results.

- Deployment: Flash or copy the optimized model onto your device, whether an MCU, an MPU, or a processor with a hardware NPU for extra acceleration.

- Integration: Use NXP’s example code and SDK integrations to get started quickly.
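The Optimization step commonly involves quantization: converting 32-bit floating-point values to 8-bit integers so the model is smaller and runs faster on MCUs and NPUs. The sketch below uses the affine relationship real = (q - zero_point) × scale, the same form used in TensorFlow Lite's 8-bit quantization specification, followed by a quick Testing-style check of how much precision was lost. The weight values are hypothetical, and the sketch uses a single per-tensor scale without calibration data, whereas real converters often quantize weights per channel and calibrate activations on sample inputs.

```python
def quantize_params(values, qmin=-128, qmax=127):
    """Choose an affine (scale, zero_point) mapping floats onto the int8 range."""
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)  # range must include 0.0
    scale = (hi - lo) / (qmax - qmin) or 1.0  # avoid a zero scale for all-zero input
    zero_point = round(qmin - lo / scale)
    return scale, max(qmin, min(qmax, zero_point))


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """real -> int8: q = round(x / scale) + zero_point, clamped to the int8 range."""
    return [max(qmin, min(qmax, round(x / scale) + zero_point)) for x in values]


def dequantize(qvalues, scale, zero_point):
    """int8 -> approximate real value: x ~= (q - zero_point) * scale."""
    return [(q - zero_point) * scale for q in qvalues]


weights = [-0.82, -0.1, 0.0, 0.33, 0.75, 1.2]  # hypothetical float32 weights
scale, zp = quantize_params(weights)
q = quantize(weights, scale, zp)
restored = dequantize(q, scale, zp)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, max_err)  # each value now fits in one byte; the error stays around scale / 2
```

Including 0.0 in the range guarantees that zero is represented exactly, which matters for padding and ReLU outputs.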

Common Applications

- Computer Vision: object detection, image classification, gesture recognition.

- Voice Recognition: wake-word detection and command processing.

- Sensor Analytics: Real-time monitoring, anomaly detection, and equipment diagnostics.

Supported Hardware and Ecosystem

- i.MX RT Series: used for applications like embedded vision and robotics.

- i.MX 8, 8X, 8M: used for applications like smart home and industrial IoT.

- S32 Processors: used for applications like automotive AI and safety-critical systems.

eIQ Model Zoo

The NXP eIQ® Model Zoo offers pre-trained models for a variety of domains and tasks that are ready to be deployed on supported products.

Models are provided in the form of “recipes” that convert the original models to TensorFlow Lite format. Because each recipe starts from the original model, users can also find the original re-trainable versions and fine-tune or retrain them if required.

The eIQ Model Zoo fits into the model development stage: it provides pre-trained models, so you can skip training from scratch and go straight to compiling and deploying.

You can use these models:

- As-is (if they fit your use case)

- Or fine-tune/retrain them (transfer learning) before compiling
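The fine-tune/retrain option usually means transfer learning: keep the pre-trained part of the model frozen and train only a small new head on your own data. The sketch below illustrates that idea in plain Python. The backbone function, the dataset, and the hyperparameters are all hypothetical stand-ins; in practice the backbone would be a Model Zoo network trained in a framework such as TensorFlow, then converted and compiled for the device.

```python
import math


def frozen_features(x):
    """Hypothetical stand-in for a pre-trained backbone; it is never updated."""
    return [x[0] + x[1], x[0] - x[1]]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_head(dataset, epochs=200, lr=0.5):
    """Fit a logistic-regression head on the frozen features with gradient descent."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, label in dataset:
            f = frozen_features(x)          # backbone output, reused as-is
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            err = p - label                 # gradient of log-loss w.r.t. the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b


def predict(x, w, b):
    """Run backbone + trained head and threshold the probability at 0.5."""
    f = frozen_features(x)
    return int(sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) >= 0.5)


# Hypothetical custom dataset: the label is 1 when the first input exceeds the second.
data = [([1.0, 0.0], 1), ([2.0, 0.5], 1), ([0.0, 1.0], 0), ([0.5, 2.0], 0)]
w, b = train_head(data)
print([predict(x, w, b) for x, _ in data])
```

Only the three head parameters are trained here, which is why transfer learning needs far less data and compute than training the whole network from scratch.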

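Note that TensorFlow Lite is itself an inference engine: converting Model Zoo models to TensorFlow Lite format is what allows them to be executed on the device.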